When you visit a website, your browser sends multiple requests to the same server to fetch resources like images, scripts, and stylesheets. (The HTML file you download contains <link>, <script>, <img>, <iframe>, <video>, and <audio> tags that point to other resources.) Establishing a new connection for each request is costly in both time and resources. This is where Connection: keep-alive and advancements like HTTP/2 come into play, optimizing how browsers and servers communicate. In this post, we’ll explore what Connection: keep-alive does, the differences between HTTP/1.1 and HTTP/2, and how these protocols impact web performance. Let’s dive in!

What is Connection: Keep-Alive?

Connection: keep-alive is an HTTP header that asks for a single TCP connection to remain open for multiple requests and responses. It started as an unofficial extension to HTTP/1.0, where by default each request required a new connection and therefore a new three-way TCP handshake, increasing latency and server load. HTTP/1.1 then made persistent connections the default.

With keep-alive:

- The browser and server agree to reuse the same connection for subsequent requests.

- This reduces latency by avoiding repeated handshakes.

- It’s especially useful for websites with multiple resources (e.g., images, CSS, JavaScript files).

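For example, when loading a webpage, keep-alive lets the browser fetch many resources over the same connection instead of opening a new one for each, making the process faster and more efficient. (Under HTTP/1.1, browsers typically keep a small pool of connections per host, usually up to six, and reuse each of them.)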
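To make connection reuse visible, here is a minimal sketch using only Python's standard library. It starts a throwaway local server that replies with the client's source port, then sends several requests through one `http.client.HTTPConnection`. The handler name `EchoClientPort` and the helper `client_ports_seen` are made up for this illustration; a real browser manages its connections internally.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class EchoClientPort(BaseHTTPRequestHandler):
    # Speaking HTTP/1.1 makes http.server keep connections open by default.
    protocol_version = "HTTP/1.1"

    def do_GET(self):
        # Reply with the client's source port so reuse is visible server-side.
        body = str(self.client_address[1]).encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def client_ports_seen(paths, reuse=True):
    """Request each path and return the client port the server saw each time."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), EchoClientPort)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    conn = http.client.HTTPConnection(
        "127.0.0.1", server.server_address[1], timeout=5
    )
    seen = []
    try:
        for path in paths:
            if not reuse:
                conn.close()  # force a fresh TCP connection (HTTP/1.0 style)
            conn.request("GET", path)
            seen.append(int(conn.getresponse().read()))
    finally:
        conn.close()
        server.shutdown()
        server.server_close()
    return seen
```

Calling `client_ports_seen(["/a.css", "/b.js", "/c.png"])` should return the same port three times, because all three requests travel over one TCP connection. With `reuse=False`, each request opens its own connection, so the ports will normally differ.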

HTTP/1.0 vs HTTP/1.1: A Step Forward

To understand the role of keep-alive, let’s compare HTTP/1.0 and HTTP/1.1:

- HTTP/1.0:

  - Each request opens a new TCP connection.

  - No persistent connections by default, leading to higher latency.

  - Limited support for modern web needs, like streaming or large file transfers.

- HTTP/1.1:

  - Made persistent connections the default behavior (unless explicitly closed with Connection: close).

  - Supports pipelining, allowing multiple requests to be sent without waiting for responses (though responses must arrive in order, and in practice browsers rarely enabled it).

  - Improved support for caching, compression, and chunked transfers.

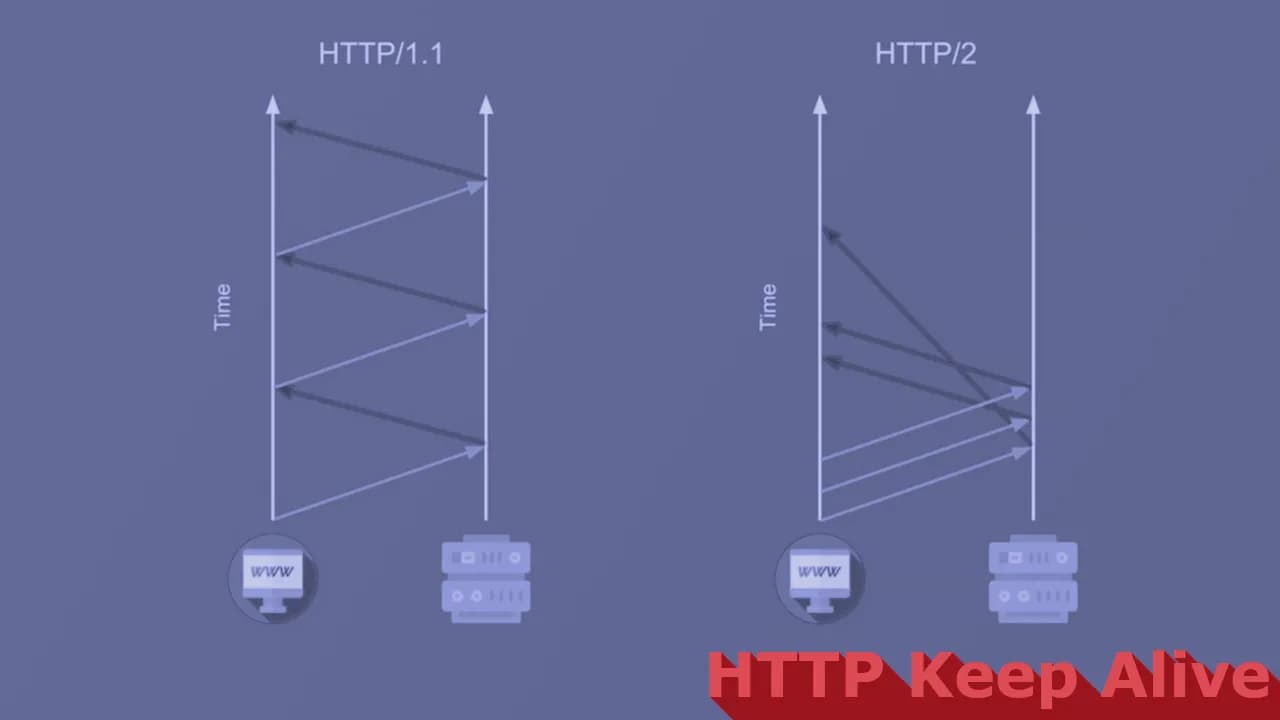

While HTTP/1.1 was a significant improvement, it still had limitations, such as head-of-line blocking, where a slow response delays subsequent requests in the pipeline. This set the stage for HTTP/2.

HTTP/2 and Multiplexing: A Game Changer

HTTP/2, introduced in 2015, revolutionized web performance by addressing HTTP/1.1’s shortcomings. One of its standout features is multiplexing, which allows multiple requests and responses to be sent simultaneously over a single TCP connection.

Key features of HTTP/2:

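- Multiplexing: Unlike HTTP/1.1’s pipelining, HTTP/2 interleaves multiple streams of requests and responses, eliminating head-of-line blocking at the HTTP layer. (Because all streams share one TCP connection, a lost packet can still stall them all until it is retransmitted.)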

- Binary Framing: HTTP/2 uses a binary protocol instead of text, making it faster and more efficient for machines to process.

- Header Compression (HPACK): Reduces overhead by compressing HTTP headers.

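- Server Push: Servers can proactively send resources (e.g., CSS files) before the browser requests them. Browser support has since been withdrawn (Chrome disabled it by default in version 106), so it should not be relied on today.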

- Prioritization: Allows browsers to specify which resources are more important, optimizing loading order.
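To give a feel for binary framing, the sketch below packs and parses the fixed 9-byte header that precedes every HTTP/2 frame: a 24-bit payload length, an 8-bit type, 8 bits of flags, and a reserved bit followed by a 31-bit stream identifier. The function names are invented for this example, and the type table is only a subset. A real client would use an HTTP/2 library rather than hand-rolling frames.

```python
# A subset of the HTTP/2 frame type codes.
FRAME_TYPES = {
    0x0: "DATA",
    0x1: "HEADERS",
    0x3: "RST_STREAM",
    0x4: "SETTINGS",
    0x6: "PING",
    0x7: "GOAWAY",
    0x8: "WINDOW_UPDATE",
}
END_STREAM = 0x1  # flag: last frame the sender will send on this stream


def pack_frame_header(length, frame_type, flags, stream_id):
    """Build the 9-byte frame header (hypothetical helper, for illustration)."""
    if not 0 <= length < 2**24:
        raise ValueError("length must fit in 24 bits")
    if not 0 <= stream_id < 2**31:
        raise ValueError("stream_id must fit in 31 bits")
    return (
        length.to_bytes(3, "big")
        + bytes([frame_type, flags])
        + stream_id.to_bytes(4, "big")
    )


def parse_frame_header(header):
    """Decode the first 9 bytes of a frame into its fields."""
    if len(header) < 9:
        raise ValueError("an HTTP/2 frame header is 9 bytes long")
    frame_type = header[3]
    return {
        "length": int.from_bytes(header[0:3], "big"),
        "type": FRAME_TYPES.get(frame_type, hex(frame_type)),
        "flags": header[4],
        # Mask off the reserved high bit of the stream identifier.
        "stream_id": int.from_bytes(header[5:9], "big") & 0x7FFFFFFF,
    }


header = pack_frame_header(5, 0x0, END_STREAM, 3)
print(header.hex())                # 000005000100000003
print(parse_frame_header(header))  # DATA frame, 5-byte payload, stream 3
```

The stream identifier in every header is what makes multiplexing possible: frames from different streams can be interleaved on the wire and reassembled by the receiver.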

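With HTTP/2, the Connection: keep-alive header itself is no longer used: connections are persistent by design, and connection-specific header fields are not allowed. Multiplexing then gets the most out of that one connection, which can carry many streams concurrently (the specification recommends a limit of no less than 100), making web pages load faster.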
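The difference between in-order pipelining and multiplexing can be sketched as a toy model. It assumes all requests are sent at time zero, the server works on them in parallel, and transfer time is negligible. These are simplifications chosen for illustration, not measurements, and TCP-level packet loss is not modeled.

```python
from itertools import accumulate


def delivery_times_pipelined(ready_ms):
    """HTTP/1.1 pipelining: responses must be delivered in request order,
    so each one waits for every response ahead of it."""
    return list(accumulate(ready_ms, max))


def delivery_times_multiplexed(ready_ms):
    """HTTP/2 multiplexing: each response is delivered as soon as it is ready."""
    return list(ready_ms)


# Hypothetical times (ms) at which the server has each response ready.
# The first resource is slow, the next two are fast.
ready = [300, 20, 20]
print(delivery_times_pipelined(ready))    # [300, 300, 300]
print(delivery_times_multiplexed(ready))  # [300, 20, 20]
```

In the pipelined case, the two fast responses are held back behind the slow one, which is head-of-line blocking in miniature.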

Performance Comparison: HTTP/1.1 vs HTTP/2

Let’s break down the performance differences:

- Latency:

  - HTTP/1.1: Keep-alive reduces latency by reusing connections, but head-of-line blocking can cause delays.

  - HTTP/2: Multiplexing and prioritization minimize delays, even for complex websites.

- Resource Usage:

  - HTTP/1.1: Multiple connections may still be needed for parallel resource loading, increasing server load.

  - HTTP/2: A single connection handles all requests, reducing server overhead.

- Real-World Impact:

  - On high-latency networks (e.g., mobile), HTTP/2’s efficiency shines.

  - For websites with many resources, HTTP/2 can reduce page load times by up to 20-30% compared to HTTP/1.1, though the actual gain depends heavily on the site and on network conditions.
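A back-of-the-envelope way to see where the latency savings come from is to count round trips. The model below is deliberately crude and rests on stated assumptions: one round trip for the TCP handshake, one per request/response, no TLS, no bandwidth limits, at least one resource, and six parallel connections for HTTP/1.1. Real page loads will not match these numbers.

```python
import math


def round_trips_http10(n_resources):
    """New connection per resource, fetched one at a time:
    1 RTT handshake + 1 RTT request/response each."""
    return 2 * n_resources


def round_trips_http11(n_resources, connections=6):
    """Persistent connections opened in parallel, no pipelining:
    1 RTT handshake, then each connection serves its share in sequence."""
    return 1 + math.ceil(n_resources / connections)


def round_trips_http2(n_resources):
    """One connection, all requests multiplexed at once:
    1 RTT handshake + 1 RTT for the whole batch."""
    return 2


rtt_ms = 100  # assumed round-trip time on a slow mobile network
for name, trips in [
    ("HTTP/1.0", round_trips_http10(30)),
    ("HTTP/1.1", round_trips_http11(30)),
    ("HTTP/2", round_trips_http2(30)),
]:
    print(f"{name}: {trips} round trips, about {trips * rtt_ms} ms")
# HTTP/1.0: 60 round trips, about 6000 ms
# HTTP/1.1: 6 round trips, about 600 ms
# HTTP/2: 2 round trips, about 200 ms
```

The gap widens as the round-trip time grows, which is why the benefit is most visible on high-latency networks.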

CDN and HTTP/2: A Perfect Match

Content Delivery Networks (CDNs) like Cloudflare or AWS CloudFront benefit significantly from HTTP/2. CDNs cache resources closer to users, reducing latency. When paired with HTTP/2:

- Multiplexing ensures faster delivery of cached resources.

- Header compression reduces bandwidth usage.

- Server push lets CDNs preload critical assets in browsers that still support it, improving performance.

Most modern CDNs support HTTP/2 by default, making it a no-brainer for websites aiming to optimize speed and SEO.

Real-World Example: Observing the Difference with Chrome DevTools

Want to see the difference between HTTP/1.1 and HTTP/2 in action? Use Chrome DevTools:

- Open Chrome and navigate to a website (e.g., one using HTTP/2, like https://www.cloudflare.com).

- Press Ctrl+Shift+I or ⌘+⌥+I to open DevTools and go to the Network tab.

- Reload the page and check the Protocol column in the resource list (if the column is hidden, right-click the table header and enable it):

  - You’ll see h2 for HTTP/2 or http/1.1 for HTTP/1.1.

- Look at the Waterfall view to analyze request timing:

  - HTTP/1.1 may show sequential loading with gaps due to head-of-line blocking.

  - HTTP/2 will show concurrent resource loading, with fewer delays.

Try comparing an HTTP/1.1 site (if you can find one!) with an HTTP/2 site to visualize the performance boost.
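If you would rather tally protocols than read them row by row, the Network tab can export the page load as a HAR file, a JSON document in which each entry records the HTTP version of its response. The sketch below counts entries per protocol. The helper name and the sample data are made up for illustration, and the exact version strings in a real export may differ from what the Protocol column shows.

```python
import json
from collections import Counter


def protocols_in_har(har):
    """Count HAR entries by the HTTP version recorded for each response."""
    entries = har["log"]["entries"]
    return Counter(entry["response"]["httpVersion"] for entry in entries)


# Made-up HAR fragment standing in for a real export.
sample_har = json.loads("""
{
  "log": {
    "entries": [
      {"request": {"url": "https://example.com/"},
       "response": {"httpVersion": "h2"}},
      {"request": {"url": "https://example.com/app.js"},
       "response": {"httpVersion": "h2"}},
      {"request": {"url": "http://legacy.example.com/pixel.gif"},
       "response": {"httpVersion": "http/1.1"}}
    ]
  }
}
""")

print(protocols_in_har(sample_har))
```

For a real export, load the file with `json.load(open("page.har", encoding="utf-8"))` and pass the result to `protocols_in_har`; `page.har` is a placeholder file name.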

Conclusion

Connection: keep-alive was a game-changer for HTTP/1.1, enabling persistent connections and reducing latency. However, HTTP/2 takes performance to the next level with multiplexing, header compression, and server push. For modern websites, especially those using CDNs, HTTP/2 is the gold standard, delivering faster load times and better user experiences.

By understanding these protocols, you can optimize your website for speed and SEO. Next time you’re debugging performance issues, fire up Chrome DevTools and see how HTTP/2 is transforming the web!
